Environment Setup

Docker Installation

Install dependencies

    sudo apt update

Import the repository's GPG key

    curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

Add the Docker APT repository to the system

    sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

Install Docker

    sudo apt update

Docker version: 20.10.12

Check the status of the Docker service

    root@ubuntu:~# systemctl status docker

Kubernetes Installation

Install the HTTPS transport so that apt can fetch packages over SSL

    apt-get update && apt-get install -y apt-transport-https

Use the Aliyun mirror

    curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

Or use the USTC mirror

    cat <<EOF > /etc/apt/sources.list.d/kubernetes.list

Updating apt then fails with the following error

    The following signatures couldn't be verified because the public key is not available: NO_PUBKEY FEEA9169307EA071 NO_PUBKEY 8B57C5C2836F4BEB

The error means that the missing public keys have to be imported. 836F4BEB is the last eight characters of the second NO_PUBKEY value

    gpg --keyserver keyserver.ubuntu.com --recv-keys 836F4BEB

Then run apt-get update again
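The key-ID extraction described above can be scripted. The following is only a sketch: the function name missing_key_ids is hypothetical, and it assumes apt reports each missing key as NO_PUBKEY followed by a 16-digit hexadecimal ID, as in the error shown.

```shell
# Print the short ID (last 8 hex digits) of every key that apt reports
# as NO_PUBKEY. Reads apt's error text from stdin.
missing_key_ids() {
    grep -oE 'NO_PUBKEY [0-9A-F]{16}' \
        | awk '{ print substr($2, 9) }' \
        | sort -u
}

# Possible usage (as root), feeding each ID to the gpg command from the text:
#   apt-get update 2>&1 | missing_key_ids | while read -r id; do
#       gpg --keyserver keyserver.ubuntu.com --recv-keys "$id"
#   done
```

Reading from stdin keeps the function free of side effects, so it can be tried on a saved copy of the error message before touching the keyring.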

Install the required tools

Edit Docker's daemon.json so that Docker uses the same cgroup driver as Kubernetes

    root@ubuntu1:~# cat /etc/docker/daemon.json
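The contents of daemon.json are not shown above. As an illustration only, and assuming the kubelet uses the systemd cgroup driver (the kubeadm default in recent releases), a minimal file could be generated as follows. The function name write_docker_daemon_json is hypothetical, and the function overwrites its target, so any existing settings would need to be merged by hand.

```shell
# Write a minimal daemon.json that selects the systemd cgroup driver.
# $1 is the target path; Docker reads /etc/docker/daemon.json.
# Note: this overwrites the file at $1.
write_docker_daemon_json() {
    cat > "$1" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}

# Possible usage (as root), followed by a Docker restart:
#   write_docker_daemon_json /etc/docker/daemon.json
#   systemctl restart docker
#   docker info --format '{{.CgroupDriver}}'   # shows the active driver
```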

    apt-get update

Check the Kubernetes version

    root@ubuntu:~# kubectl version

Initialize the master node

    kubeadm init \

Configure kubectl

    mkdir -p $HOME/.kube

Check the node status

    root@ubuntu:~# kubectl get nodes

The status is NotReady. The kubelet log shows that no network plugin is installed

    root@ubuntu:~# journalctl -u kubelet -f

Install the flannel pod network plugin

    root@ubuntu:~# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Check the node status again

    root@ubuntu:~# kubectl get nodes

Allow pods to be scheduled on the master

    kubectl taint nodes --all node-role.kubernetes.io/master-

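Service Mesh

A service mesh is an infrastructure layer dedicated to service-to-service communication. It is responsible for reliably delivering requests across the complex network topology formed by modern cloud-native applications.

Characteristics of a service mesh

- Lightweight network proxies

- Transparent to the application

- Traffic between applications is taken over by the mesh

- Concerns of inter-service calls such as timeouts, retries, monitoring and tracing are pushed down into the mesh layer

A mesh generally consists of a data plane and a control plane. The data plane deploys a sidecar request proxy alongside each service. The control plane handles the interaction among the proxies, and between users and the proxies.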

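Istio

With load balancing, service-to-service authentication, monitoring and more, Istio makes it easy to create a mesh of deployed services with few or no changes to service code. Istio support is added to services by deploying a special sidecar proxy throughout the environment. The proxy intercepts all network communication between microservices, and Istio is then configured and managed through its control plane functionality, which includes:

- Automatic load balancing for HTTP, gRPC, WebSocket and TCP traffic

- Fine-grained control of traffic behavior with rich routing rules, retries, failovers and fault injection

- A pluggable policy layer and configuration API supporting access control, rate limits and quotas

- Automatic metrics, logs and traces for all traffic within the cluster, including cluster ingress and egress

- Secure service-to-service communication in a cluster with strong identity-based authentication and authorization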

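Core Features of Istio

Traffic management

Istio's simple rule configuration and traffic routing let you control the flow of traffic and API calls between services. Istio simplifies the configuration of service-level properties such as circuit breakers, timeouts and retries, and makes it easy to set up important tasks such as A/B testing, canary rollouts and staged rollouts with percentage-based traffic splits. With better visibility into traffic and out-of-the-box failure recovery, issues can be caught before they cause problems, making calls more reliable and the network more robust.

Security

Istio's security features let developers focus on security at the application level. Istio provides the underlying secure communication channel and manages authentication, authorization and encryption of service communication at scale. With Istio, service communication is secure by default, and policies can be enforced consistently across different protocols and runtimes.

Observability

Istio's robust tracing, monitoring and logging features give deeper insight into a service mesh deployment. Istio's monitoring shows how service performance affects things upstream and downstream, and its custom dashboards provide visibility into the performance of all services.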

Installing Istio

Installation guide: https://istio.io/latest/docs/setup/getting-started/

Download version 1.11.6

    curl -L https://istio.io/downloadIstio | ISTIO_VERSION=1.11.6 sh -

Change into the download directory

    cd istio-1.11.6/

Add the istioctl client to the PATH

    export PATH=$PWD/bin:$PATH

List the Istio installation profiles

    root@ubuntu:~/istio-1.11.6# istioctl profile list

Install with the demo profile

    istioctl manifest apply --set profile=demo

Label the namespace

    kubectl label namespace default istio-injection=enabled

Deploying the Sample Application

Deploy the bookinfo sample

    root@ubuntu:~/istio-1.11.6# kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml

Check the pods

    root@ubuntu:~/istio-1.11.6# kubectl get pods

Verify that the application is running correctly

    root@ubuntu:~/istio-1.11.6# kubectl exec "$(kubectl get pod -l app=ratings -o jsonpath='{.items[0].metadata.name}')" -c ratings -- curl -sS productpage:9080/productpage | grep -o "<title>.*</title>"

Enable external access

Associate the application with the Istio gateway

    kubectl apply -f samples/bookinfo/networking/bookinfo-gateway.yaml

Check how the service is exposed externally

    root@ubuntu:~/istio-1.11.6# kubectl get svc istio-ingressgateway -n istio-system

Change the service type to NodePort

    kubectl edit svc istio-ingressgateway -n istio-system

Test access

    root@ubuntu:~/istio-1.11.6# curl 192.168.19.85:31356/productpage

Experiment: Accelerating the Service Mesh with eBPF

Code: https://github.com/merbridge/merbridge

Upgrade the kernel

The experiment requires kernel version >= 5.7. We first search apt for a Linux image package of the desired version, but no usable version is found

    root@ubuntu:~# apt-cache search linux| grep 5.8

So we download the packages directly from the official site

URL: https://kernel.ubuntu.com/~kernel-ppa/mainline/

    wget -c https://kernel.ubuntu.com/~kernel-ppa/mainline/v5.8/amd64/linux-headers-5.8.0-050800-generic_5.8.0-050800.202008022230_amd64.deb

Install the kernel .deb packages

    root@ubuntu:~# sudo dpkg -i *.deb

When the installation finishes, reboot and check the kernel version

    root@ubuntu:~# uname -r
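The >= 5.7 requirement can also be checked in a script rather than by eye. This is a minimal sketch: the function name kernel_at_least is hypothetical, and it relies on the version ordering of GNU sort -V, which is available on Ubuntu.

```shell
# Succeed if kernel release $1 (e.g. the output of `uname -r`) is at
# least version $2 (e.g. 5.7).
kernel_at_least() {
    local have="${1%%-*}"   # drop a suffix such as "-050800-generic"
    local want="$2"
    # sort -V orders version strings; if the smaller one is $want,
    # then $have is greater than or equal to $want.
    [ "$(printf '%s\n%s\n' "$want" "$have" | sort -V | head -n1)" = "$want" ]
}

# Possible usage:
#   kernel_at_least "$(uname -r)" 5.7 || echo "kernel too old for merbridge"
```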

Version information

    root@ubuntu:~# docker version

eBPF state before applying the YAML file

List the programs attached to all cgroups on the system

    root@ubuntu:~# bpftool cgroup tree

List all BPF programs currently loaded on the system

    root@ubuntu:~# bpftool prog show

Installing merbridge

    root@ubuntu:~# kubectl apply -f https://raw.githubusercontent.com/merbridge/merbridge/main/deploy/all-in-one.yaml

    root@ubuntu:~# kubectl get pods -n istio-system

List the programs attached to all cgroups again

    root@ubuntu:~# bpftool cgroup tree

List all loaded BPF programs again

    root@ubuntu:~# bpftool prog show

The eBPF programs have been loaded into the kernel successfully

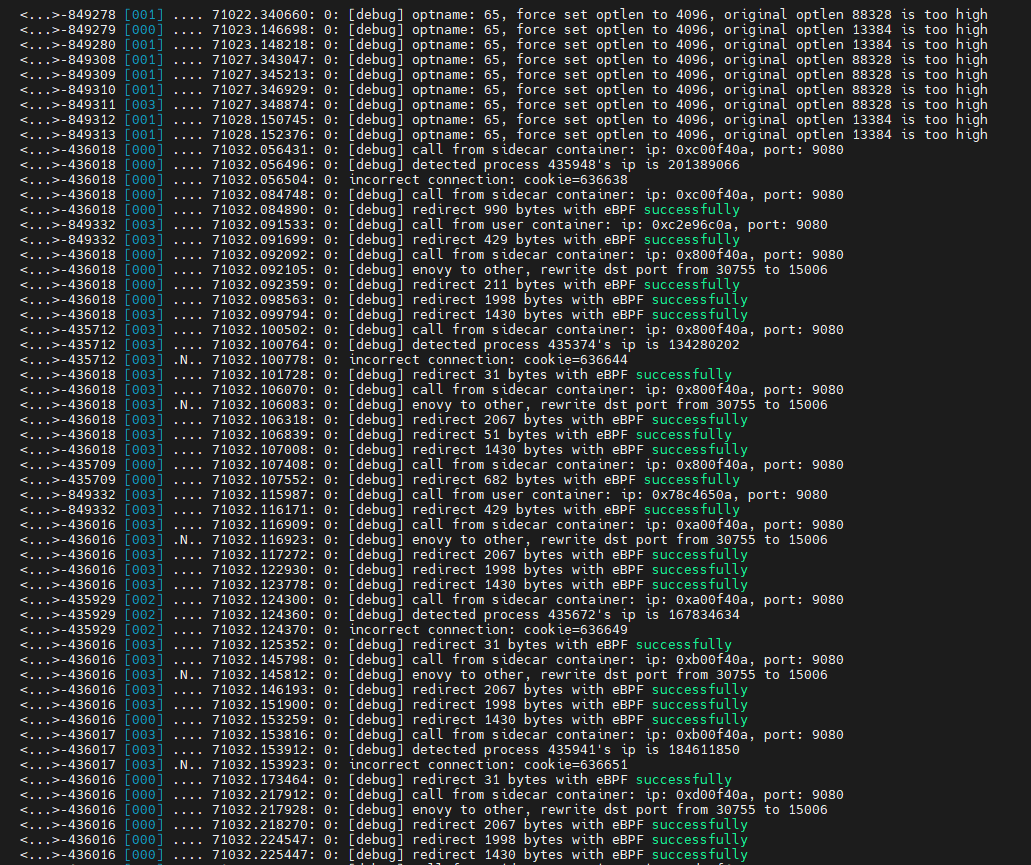

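Confirm that the eBPF programs are in effect

Enable debug mode in the YAML file

Turn on tracing

    echo 1 > /sys/kernel/debug/tracing/tracing_on

Access 192.168.19.84:31356/productpage again

Use cat /sys/kernel/debug/tracing/trace_pipe to view the output

The TPS test results are as follows

Host 85 has no merbridge deployed, so it is not accelerated by eBPF. Host 84 is accelerated by eBPF. With eBPF acceleration the TPS doubled.

    root@ubuntu:~/wrk-master# ./wrk -c1000 --latency http://192.168.19.85:31356/productpage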
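To compare the two hosts numerically, the throughput figure can be pulled out of wrk's report. This is a rough sketch, not part of the original procedure. It assumes the report contains a line beginning with "Requests/sec:", as wrk prints, and the function names requests_per_sec and speedup are hypothetical.

```shell
# Extract the "Requests/sec" figure from a wrk report read on stdin.
requests_per_sec() {
    awk '/^Requests\/sec:/ { print $2 }'
}

# Print the ratio of two throughput figures, rounded to two decimals.
# Usage: speedup BASELINE ACCELERATED
speedup() {
    awk -v base="$1" -v fast="$2" 'BEGIN { printf "%.2f\n", fast / base }'
}

# Possible usage, one run per host:
#   base="$(./wrk -c1000 --latency http://192.168.19.85:31356/productpage | requests_per_sec)"
#   fast="$(./wrk -c1000 --latency http://192.168.19.84:31356/productpage | requests_per_sec)"
#   speedup "$base" "$fast"
```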

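Cluster Tests

The cluster tests are split into two groups

- Without merbridge acceleration: 192.168.19.85 and 192.168.19.83

- With merbridge acceleration: 192.168.19.84 and 192.168.19.82

The master nodes are 192.168.19.85 and 192.168.19.84 respectively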

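Worker node configuration

1. Install Docker and the Kubernetes tools as described earlier

2. Join the worker node to the master node

On the master node, list the tokens and create one if none exists

    root@ubuntu:~# kubeadm token list

    root@ubuntu:~# kubeadm token create

If you do not have the value for --discovery-token-ca-cert-hash, obtain it by running the following command on the control-plane node

    root@ubuntu:~# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | \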
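The command above is cut off at the line continuation. As a sketch only, assuming the usual kubeadm recipe in which the hash is the SHA-256 digest of the DER-encoded CA public key, the whole pipeline could be wrapped as follows. The function name ca_cert_hash is hypothetical, and the tail of the pipeline is an assumption, not taken from the original listing.

```shell
# Print the SHA-256 hash of the public key in the CA certificate at $1,
# as a bare hex string.
ca_cert_hash() {
    openssl x509 -pubkey -in "$1" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'   # strip the "(stdin)= " style prefix
}

# Possible usage on the control-plane node; kubeadm join expects the
# value prefixed with "sha256:":
#   echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
```

Alternatively, kubeadm token create --print-join-command prints a complete join command, including the token and the hash.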

3. Run the kubeadm join command on the worker node

    root@ubuntu1:/etc/kubernetes# swapoff -a

4. Check the nodes from the master node

    root@ubuntu:/etc/kubernetes# kubectl get nodes

5. Check how the pods are distributed

192.168.19.85

    root@ubuntu:~# kubectl get pod --all-namespaces -o wide

192.168.19.84

    root@ubuntu:~# kubectl get pod --all-namespaces -o wide

Sending requests to pods from outside

Within a cluster

From node 192.168.19.85, send requests to the pod on node 192.168.19.83 (without merbridge acceleration)

    root@ubuntu:~# wrk -c1000 --latency http://192.168.19.83:31356/productpage

From node 192.168.19.84, send requests to the pod on node 192.168.19.82 (with merbridge acceleration)

    root@ubuntu:~# wrk -c1000 --latency http://192.168.19.82:31356/productpage

Across clusters

From node 192.168.19.85, send requests to the pod on node 192.168.19.84 (merbridge is deployed on 84)

    root@ubuntu:~# wrk -c1000 --latency http://192.168.19.84:31356/productpage

From node 192.168.19.84, send requests to the pod on node 192.168.19.85 (merbridge is not deployed on 85)

    root@ubuntu:~# wrk -c1000 --latency http://192.168.19.85:31356/productpage

Sending requests between pods on the same node

Install wrk inside the pod

Enter the pod

    kubectl exec -it <pod-name> -- /bin/sh

Installing the wrk load-testing tool fails because the required commands cannot be run

    / $ ls

Solution: https://stackoverflow.com/questions/45142855/bin-sh-apt-get-not-found

Enter the container as root through docker

    docker exec -it --user=root <CONTAINER ID> /bin/sh

Install with apk

    / # apk update

Run make in the wrk directory

    /tmp/wrk-master # make

From the pod sleep-698cfc4445-k8ncb, send requests to the pod helloworld-v1-fdb8c8c58-gh4sf. Both pods run on node 192.168.19.83, where merbridge is not deployed

    /tmp/wrk-master # wrk -c10000 --latency http://10.101.180.145:5000

From the pod sleep-698cfc4445-qncjl, send requests to the pod helloworld-v1-fdb8c8c58-28pm4. Both pods run on node 192.168.19.82, where merbridge is deployed

    /tmp/wrk-master # wrk -c10000 --latency http://10.101.187.77:5000

Sending requests between pods on different nodes

From the pod sleep-698cfc4445-k8ncb on node 192.168.19.83, send requests to the pod productpage-v1-65b75f6885-p6k2w on node 192.168.19.85. The two pods are on different nodes, and merbridge is not deployed in this cluster

    / $ wrk -c1000 --latency http://192.168.19.85:31356/productpage

From the pod sleep-698cfc4445-qncjl on node 192.168.19.82, send requests to the pod productpage-v1-65b75f6885-kt88j on node 192.168.19.84. The two pods are on different nodes, and merbridge is deployed in this cluster

    / $ wrk -c1000 --latency http://192.168.19.84:31356/productpage

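Extra: Controlling the Cluster from a Machine Other Than the Control-Plane Node

Listing pods from a worker node fails with the following error

    root@ubuntu:~# kubectl get pod

The cause is that kubectl needs the kubernetes-admin credentials to run. To fix this, copy /etc/kubernetes/admin.conf from the master node to the same directory on the worker node, then set the environment variable

    root@ubuntu:~# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile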
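Running the echo command above more than once appends duplicate lines to the profile. An idempotent variant might look like the following sketch. The function name add_kubeconfig_export is hypothetical.

```shell
# Append the KUBECONFIG export to profile file $1 unless that exact
# line is already present.
add_kubeconfig_export() {
    local line='export KUBECONFIG=/etc/kubernetes/admin.conf'
    grep -qxF "$line" "$1" 2>/dev/null || printf '%s\n' "$line" >> "$1"
}

# Possible usage, then reload the profile in the current shell:
#   add_kubeconfig_export ~/.bash_profile
#   . ~/.bash_profile
```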

List the pods again

    root@ubuntu:~# kubectl get pods

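Walkthrough of the merbridge YAML File (Istio)

https://github.com/merbridge/merbridge/blob/main/deploy/all-in-one.yaml

Part 1 declares the kind of object to create, here a ClusterRole

    apiVersion: rbac.authorization.k8s.io/v1 # version of the Kubernetes API used to create this object

Part 2 grants authorization cluster-wide by binding the cluster role's permissions

    apiVersion: rbac.authorization.k8s.io/v1

Part 3 specifies the service account for the pods, in the istio-system namespace

    apiVersion: v1

Part 4 creates the pods through a DaemonSet. We discuss it in two parts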

initContainers

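First, look at the volumes mounted by initContainers. When using volumes, the volumes provided to the Pod are set in the .spec.volumes field, and the location where each volume is mounted in a container is declared in .spec.containers[*].volumeMounts. So we start with the .spec.volumes field, shown below

    volumes:

Two volume types are used here, hostPath and emptyDir. A hostPath volume maps a file or directory from the node's file system into the pod. For an emptyDir volume, Kubernetes automatically allocates a directory on the node, so no corresponding host directory needs to be specified.

Back in initContainers, there are two mountPath entries. They use host-ips and host-proc from .spec.volumes, mounted at /host/ips and /host/proc in the container

    initContainers: # an init container is a special container that runs before the application containers in the Pod start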

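Once the volumes are mounted, look at the command arguments. They use nsenter, a command that runs a given program inside the namespaces of a given process. It is part of the util-linux package.

For usage details see: https://juejin.cn/post/7038531145113452581

The --net option enters a network namespace, here the one identified by the given file. The command nsenter --net=/host/proc/1/ns/net ip -o addr therefore prints the host's IP address information. Testing on the host:

    root@ubuntu:~# nsenter --net=/proc/1/ns/net ip -o addr

One might ask why ip -o addr is not used directly. On the host the two commands produce the same result, as shown below, because PID 1 lives in the host's network namespace. The difference only appears when the command runs in a container that has its own network namespace.

    root@ubuntu:~# ip -o addr | awk '{print $4}'

It is worth noting that one of the most typical uses of nsenter is to enter a container's network namespace. Many containers are kept lightweight and do not ship basic tools such as ip address, ping, telnet, ss or tcpdump, which makes debugging container networking quite painful.

awk '{print $4}' splits each line on spaces or tabs and prints the fourth field

awk usage: https://www.runoob.com/linux/linux-comm-awk.html

Testing on the host:

    root@ubuntu:~# nsenter --net=/proc/1/ns/net ip -o addr | awk '{print $4}'

The result is then written to /host/ips/ips.txt with the tee command.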
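The awk and tee steps can be tried without a cluster by feeding them sample lines in the format of ip -o addr. This is a minimal sketch: the function name collect_ips is hypothetical, and the real init container reads from nsenter rather than from stdin.

```shell
# Read `ip -o addr` style lines on stdin, keep the fourth field
# (the address/prefix column), and write the result both to stdout
# and to the file given as $1.
collect_ips() {
    awk '{ print $4 }' | tee "$1"
}

# Possible usage on a host (as root), mirroring the init container:
#   nsenter --net=/proc/1/ns/net ip -o addr | collect_ips /tmp/ips.txt
```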

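From this we can see that the init container's job is to collect the host's IP address information and store it in ips.txt. The init container terminates once it has finished, but its result stays available to the application and sidecar containers.

containers

The main container uses the same image as the init container. It also has two mountPath entries, using host-ips and sys-fs from .spec.volumes, mounted at /host/ips and /sys/fs in the container. Its securityContext declares that the container must run in privileged mode.

    containers:

Now look at this container's arguments. From the source code, -m is the service mesh mode. istio and linkerd are currently supported, and here we use istio. --ips-file is the name of the file holding the current node's IP information, that is, the path /host/ips/ips.txt written by the init container.

The lifecycle field manages actions taken when the container starts and before it shuts down. preStop is the task run before the container is terminated, used to shut the application down gracefully or to notify other systems. Here make clean is executed before termination to remove the previously compiled executables and configuration files.

Part 5, pod-level policies

    dnsPolicy: ClusterFirst # per-pod DNS policy; ClusterFirst is the default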

eBPF Program Analysis

helpers.h


maps.h


mb_bind.c

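Hijacks the bind system call and rewrites the address. The project currently supports Istio and linkerd. mb_bind.c checks whether the mesh type is linkerd and, if so, changes the listening address from 127.0.0.1:4140 to 0.0.0.0:4140. Port 4140 is linkerd's outbound traffic redirect port.

In mb_connect.c, to avoid four-tuple conflicts, the author rewrites the destination address to 127.x.y.z instead of 127.0.0.1, which the linkerd source code does not allow.

For details on that code see: https://github.com/linkerd/linkerd2-proxy/pull/1442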


mb_connect.c

Hijacks the connect system call


mb_get_sockopts.c


mb_redir.c


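Parameters of bpf_msg_redirect_hash

    bpf_msg_redirect_hash(msg, &sock_pair_map, &p, 0);

- msg: user-accessible metadata of the data to be sent

- sock_pair_map: the sockhash map this BPF program is attached to

- p: the key used to index into the map

- 0: the flags argument, which decides which queue of the peer socket receives the data. BPF_F_INGRESS selects the ingress path, and 0 selects the egress path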

mb_sockops.c


Problems Caused by Enabling cgroup v2

How to enable cgroup v2

Adjust the Linux kernel boot parameters in GRUB

    sudo vim /etc/default/grub

Set GRUB_CMDLINE_LINUX to systemd.unified_cgroup_hierarchy=1

Update GRUB and reboot

    sudo update-grub
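The manual edit in vim can also be done non-interactively. The following is a sketch under stated assumptions: it expects GNU sed and a GRUB_CMDLINE_LINUX="..." line in the usual double-quoted form, and the function name enable_cgroup_v2_flag is hypothetical. It appends the flag to the existing value instead of replacing it.

```shell
# Add systemd.unified_cgroup_hierarchy=1 to GRUB_CMDLINE_LINUX in the
# file $1 (normally /etc/default/grub). Does nothing if the flag is
# already present anywhere in the file.
enable_cgroup_v2_flag() {
    local flag='systemd.unified_cgroup_hierarchy=1'
    grep -qF "$flag" "$1" && return 0
    # Only the GRUB_CMDLINE_LINUX line matches; GRUB_CMDLINE_LINUX_DEFAULT
    # is left untouched because "=" must follow "LINUX" directly.
    sed -i -E "s/^(GRUB_CMDLINE_LINUX=\")([^\"]*)\"/\1\2 ${flag}\"/" "$1"
}

# Possible usage (as root):
#   enable_cgroup_v2_flag /etc/default/grub
#   update-grub && reboot
```

When the original value is empty, the result carries a leading space inside the quotes, which is harmless on the kernel command line.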

Check whether cgroup v2 is enabled

    root@ubuntu:~$ cat /sys/fs/cgroup/cgroup.controllers
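The check above works because cgroup.controllers exists only at the root of a cgroup v2 hierarchy. A small sketch wraps it so a script can branch on the result. The function name cgroup_version is hypothetical, and the optional argument exists only so that the function can be pointed at another mount point.

```shell
# Print "v2" if the cgroup mount at $1 (default /sys/fs/cgroup) is a
# unified cgroup v2 hierarchy, otherwise print "v1".
cgroup_version() {
    local root="${1:-/sys/fs/cgroup}"
    if [ -f "$root/cgroup.controllers" ]; then
        echo v2
    else
        echo v1
    fi
}

# Possible usage:
#   cgroup_version                         # checks /sys/fs/cgroup
#   cgroup_version /sys/fs/cgroup/unified  # checks a hybrid-mode mount
```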

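Without cgroup v2 enabled, running docker run after pulling the merbridge image fails with the following error

    root@ubuntu:~# docker run -it --privileged 605389bb6641

With cgroup v2 enabled, docker run works, but applying the YAML in Kubernetes fails. The pods show the following error

    root@ubuntu:~# kubectl get pods -n istio-system

In the end it turned out that cgroup v2 is a single unified hierarchy, so there is only one mount point, /sys/fs/cgroup/unified

https://github.com/merbridge/merbridge/issues/60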

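How the iptables Rules Are Injected

List the processes in the istio-proxy container of the productpage pod

    root@ubuntu:~# docker top `docker ps|grep "istio-proxy_productpage"|cut -d " " -f1`

Use nsenter to enter the network namespace of the sidecar container

    root@ubuntu:~# nsenter -n --target 9391

Inspect the iptables rule chains inside that process's namespace

    # Show the detailed rule configuration of the NAT table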
