What is Cilium?

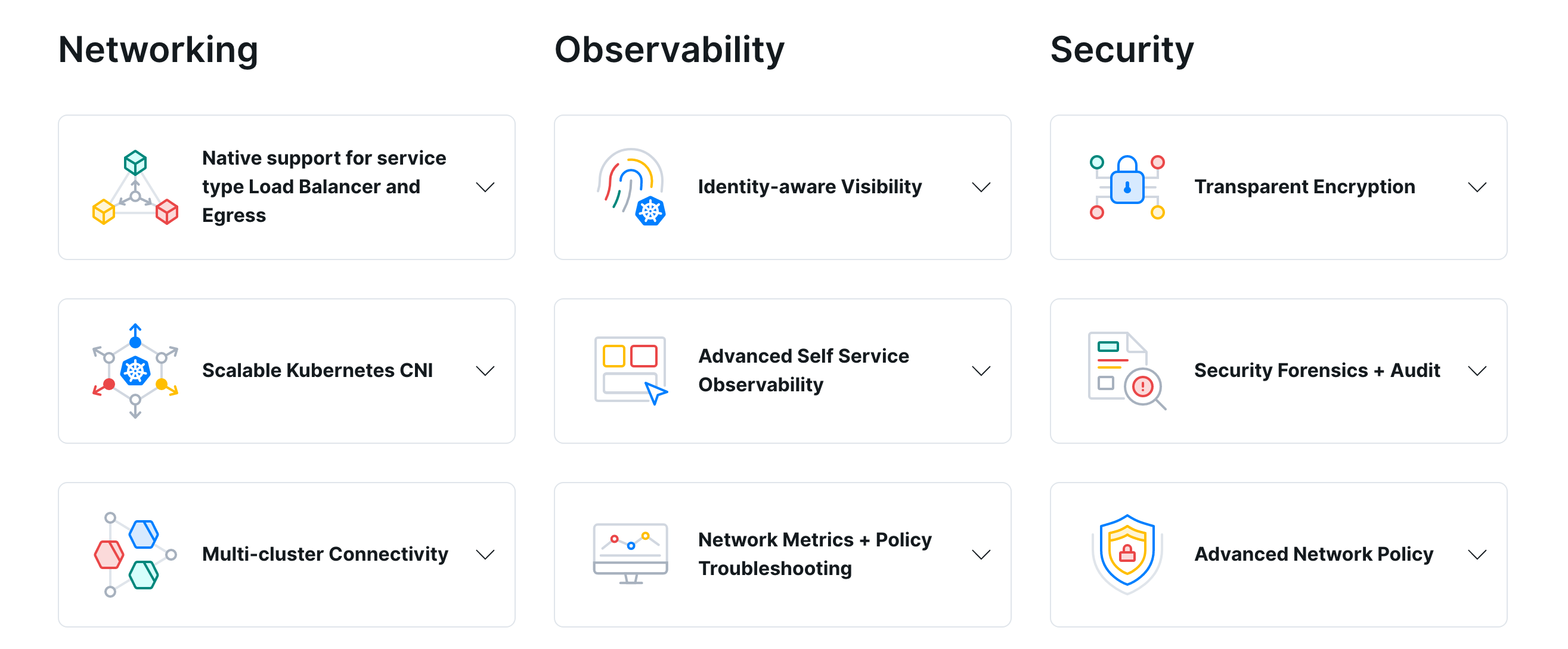

Cilium is open source software for transparently securing the network connectivity between application services deployed using Linux container management platforms like Docker and Kubernetes.

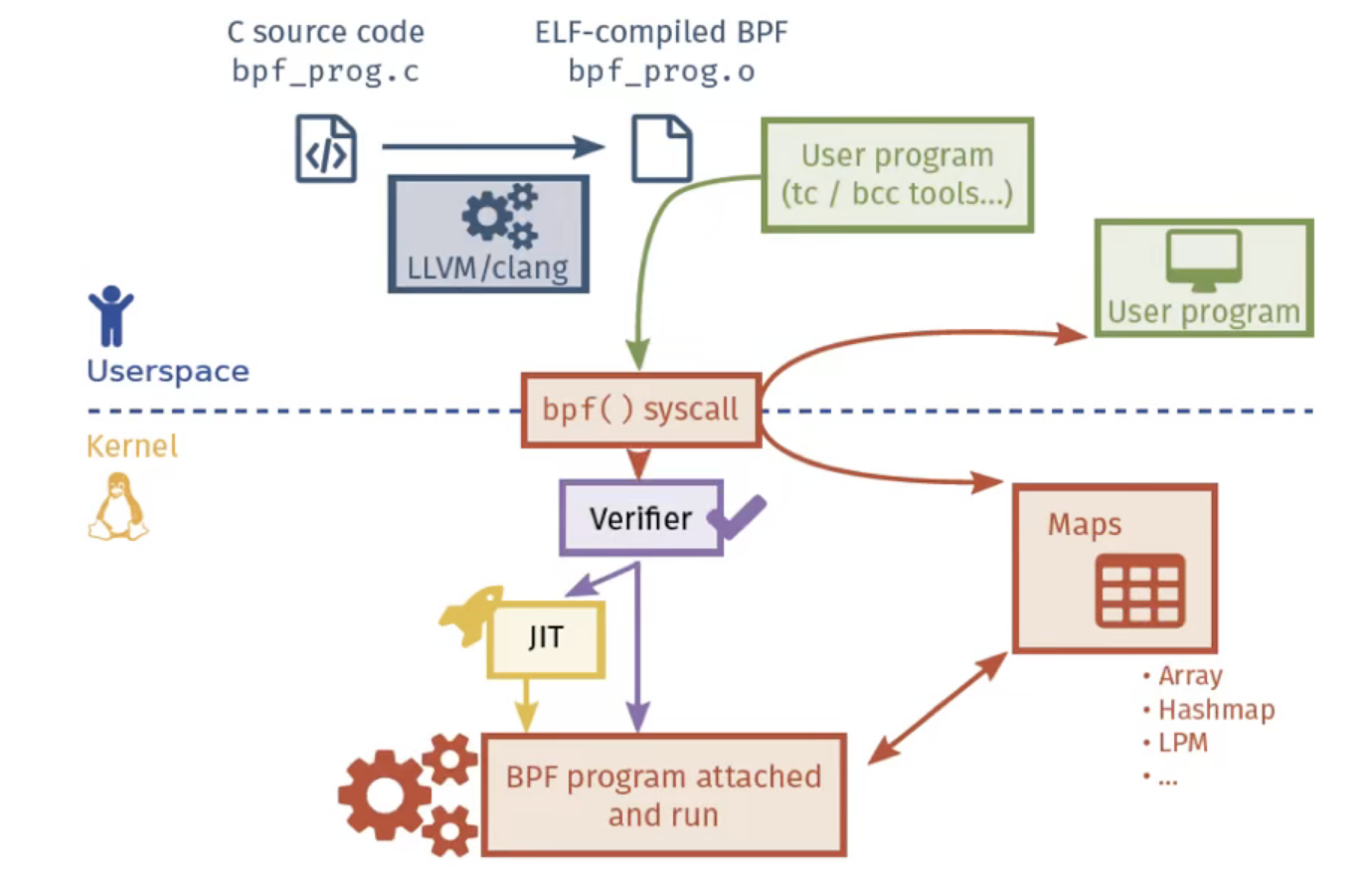

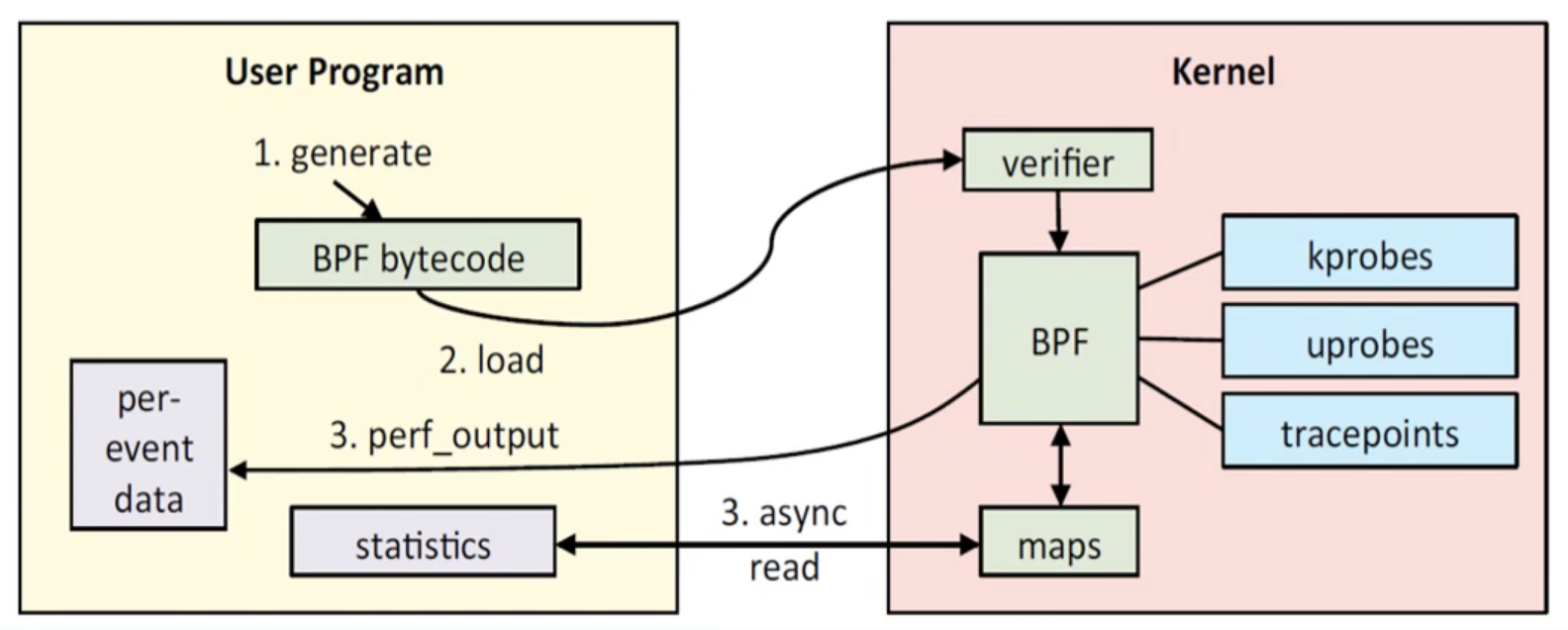

At the foundation of Cilium is a new Linux kernel technology called BPF, which enables the dynamic insertion of powerful security visibility and control logic within Linux itself. Because BPF runs inside the Linux kernel, Cilium security policies can be applied and updated without any changes to the application code or container configuration.

Why Cilium?

eBPF Architecture

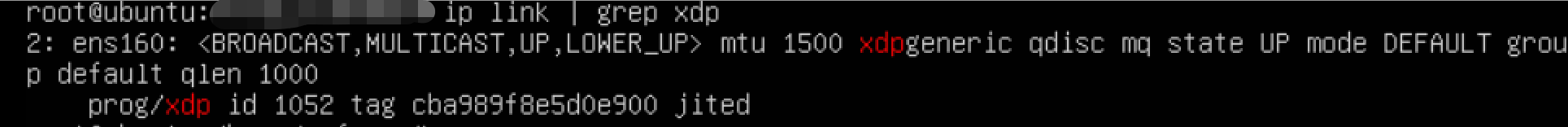

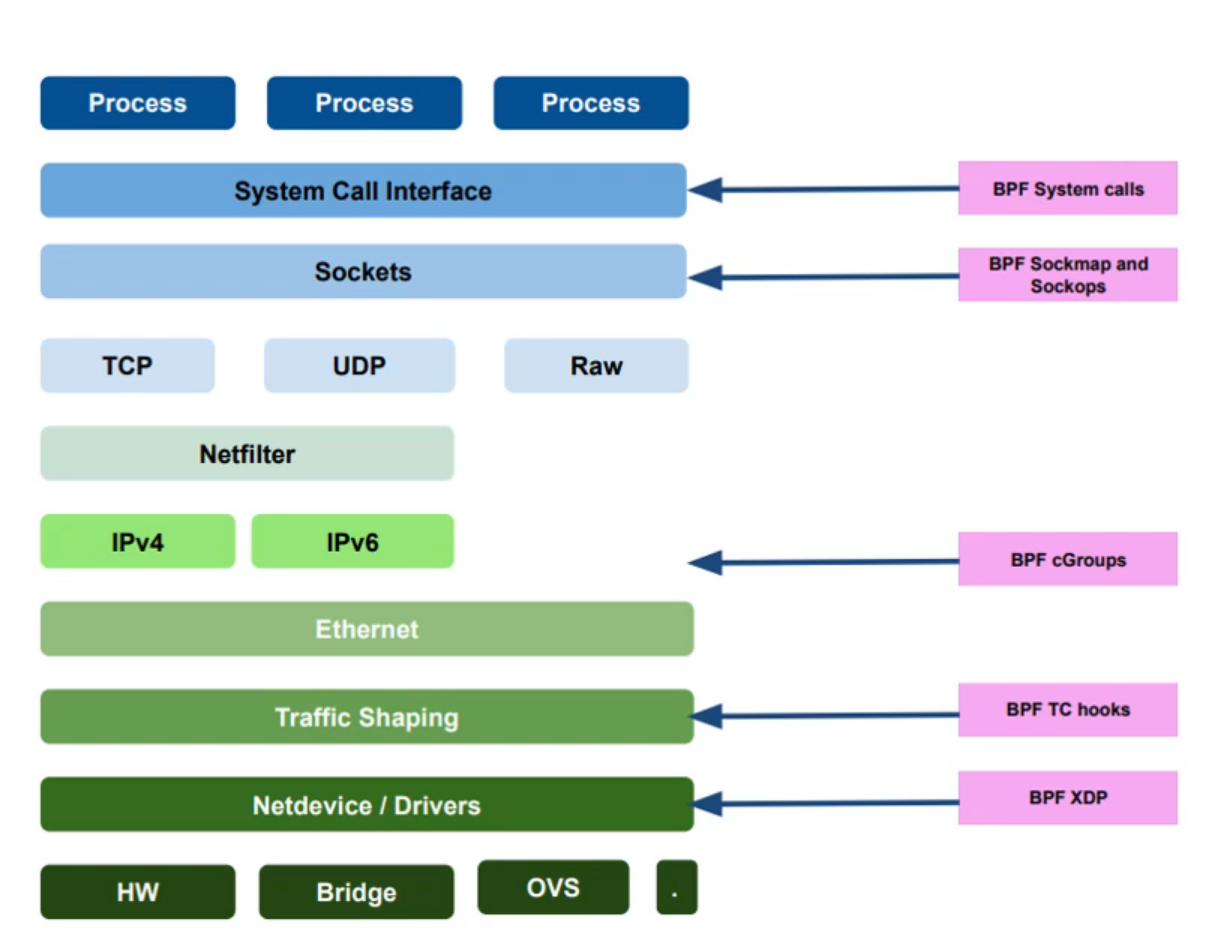

XDP

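Cilium makes heavy use of network-related BPF hooks such as XDP and TC to achieve high-performance network RX and TX.

XDP stands for eXpress Data Path and is the lowest layer of the Linux kernel network stack. It exists only on the RX path and allows packets to be processed at the earliest possible point, inside the network device driver. In certain modes, processing completes before the kernel allocates memory (an skb) for the packet.

Try writing an XDP program named xdp-example.c.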
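As a minimal sketch of what such a program can look like, the following drops every packet it sees, which makes its effect easy to observe with ping. The function name `xdp_drop`, the `SEC` helper macro, and the section name `prog` (the section iproute2 has traditionally looked for when no `sec` argument is given) are assumptions, not taken from the author's file.

```c
/* xdp-example.c: hypothetical minimal XDP program that drops all packets. */
#include <linux/bpf.h>

/* Place a symbol in a named ELF section so the loader can find it. */
#ifndef SEC
#define SEC(name) __attribute__((section(name), used))
#endif

/* Assumed entry point. Returning XDP_DROP discards the packet at the
 * XDP hook, which in native mode runs inside the driver. */
SEC("prog")
int xdp_drop(struct xdp_md *ctx)
{
    (void)ctx; /* the packet contents are not inspected in this sketch */
    return XDP_DROP;
}

/* License string read by the kernel when the program is loaded. */
char _license[] SEC("license") = "GPL";
```

Returning `XDP_PASS` instead would let packets continue up the network stack.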


See what each stage of the compilation does:

`root@ubuntu:~# clang -ccc-print-phases -O2 -Wall -target bpf -c xdp-example.c -o xdp-example.o`

`root@ubuntu:~# file xdp-example.o`

Loading the XDP program

First list the host's network devices:

`root@ubuntu:~# ip a`

Ping the virtual machine before loading the program:

`caoyifan@MacBookPro ~ % ping 192.168.19.84`

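Load the XDP program onto the network device:

`root@ubuntu:~# ip link set dev ens160 xdp obj xdp-example.o`

Check that it is attached to the ens160 interface.

Ping the virtual machine again after loading the program:

`caoyifan@MacBookPro ~ % ping 192.168.19.84`

Unload the XDP program:

`root@ubuntu:~# ip link set dev ens160 xdp off`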

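Kernel tracing

History of dynamic tracing

Strictly speaking, dynamic tracing on Linux is an advanced debugging technique. It allows in-depth analysis in both kernel space and user space, helping developers and system administrators locate and resolve problems quickly.

The table below outlines the rough evolution of Linux tracing technologies.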

| Year | Technology |
|---|---|
| 2004 | kprobes/kretprobes |
| 2005 | systemtap |
| 2008 | ftrace |
| 2009 | perf_events |
| 2009 | tracepoints |
| 2012 | uprobes |
| 2015 | eBPF |

tracepoint

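Tracepoints are hooks placed throughout the kernel source code. They fire when a specific piece of code is executed, a property that various trace/debug tools can take advantage of.

perf records the events generated by tracepoints and produces reports. By analyzing these reports, performance engineers can understand what the kernel was doing while a program ran and diagnose performance symptoms accurately.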

Installing the perf tool

First download the source package matching your kernel: https://mirrors.edge.kernel.org/pub/linux/kernel/

`root@ubuntu:~/linux-5.8/tools/perf# uname -r`

`root@ubuntu:~/linux-5.8/tools/perf# ./perf list tracepoint`

System calls made by the ping command, seen from user space:

`root@ubuntu:~/linux-5.8/tools/perf# strace -fF -e trace=network ping 114.114.114.114 -c 1`

Network tracepoints hit by the ping command, seen from kernel space:

`root@ubuntu:~/linux-5.8/tools/perf# ./perf trace --event 'net:*' ping 114.114.114.114 -c 1 > /dev/null`

In addition, perf can show how much CPU time each function is using:

`root@ubuntu:~/linux-5.8/tools/perf# ./perf top`

uprobes

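A uprobe is a user-space probe, the counterpart of kprobe, which is a kernel-space probe. A uprobe requires you to specify the probe's location within the executable file; the mechanism for inserting the probe is similar to that of kprobe.

Prepare a test program, demo.c.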


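Compile and run:

`root@ubuntu:~/uprobe# gcc demo.c -o demo`

If the program misbehaves at this point, we can use uprobe to inspect what it is doing.

First use objdump to look up the relevant symbol information:

`root@ubuntu:~/uprobe# objdump -t demo | grep print`

Register the probe point for the program in uprobe_events:

`root@ubuntu:~/uprobe# cat /sys/kernel/debug/tracing/uprobe_events`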
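The `uprobe_events` file accepts probe definitions of the form `p:EVENT PATH:OFFSET`, as described in the kernel's uprobe tracer documentation. A minimal sketch of building such a line in C follows. The helper name `format_uprobe_event`, the event name `demo_print`, the binary path, and the offset `0x1149` are placeholders; the real offset has to be derived from the `objdump` output for your own build.

```c
#include <stdio.h>

/* Format a uprobe definition ("p:EVENT PATH:OFFSET") into buf.
 * Returns 0 on success, -1 if the buffer is too small. */
int format_uprobe_event(char *buf, size_t len, const char *event,
                        const char *path, unsigned long offset)
{
    int n = snprintf(buf, len, "p:%s %s:0x%lx", event, path, offset);

    return (n < 0 || (size_t)n >= len) ? -1 : 0;
}
```

With the placeholder values above, the resulting line would be `p:demo_print /root/uprobe/demo:0x1149`, which is then written to `/sys/kernel/debug/tracing/uprobe_events`.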

Enable tracing:

`root@ubuntu:~/uprobe# echo 1 > /sys/kernel/debug/tracing/events/uprobes/enable`

View the trace log:

`root@ubuntu:~/uprobe# cat /sys/kernel/debug/tracing/trace`

Disable tracing and clean up:

`root@ubuntu:~/uprobe# echo 0 > /sys/kernel/debug/tracing/events/uprobes/enable`

kprobe

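Kprobe is a kernel debugging facility that can dynamically trace kernel behavior and collect debugging and performance information.

Inspect calls to do_sys_open, the kernel function behind the open system call (the `r:` prefix registers a return probe):

`root@ubuntu:~# echo 'r:myprobe do_sys_open' > /sys/kernel/debug/tracing/kprobe_events`

Enable tracing:

`root@ubuntu:~# echo 1 > /sys/kernel/debug/tracing/events/kprobes/enable`

View the recorded calls:

`root@ubuntu:~# cat /sys/kernel/debug/tracing/trace`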

Network tracing

- BPF XDP

- BPF TC hooks

- BPF Cgroups

- BPF sockmap and sockops

- BPF system calls

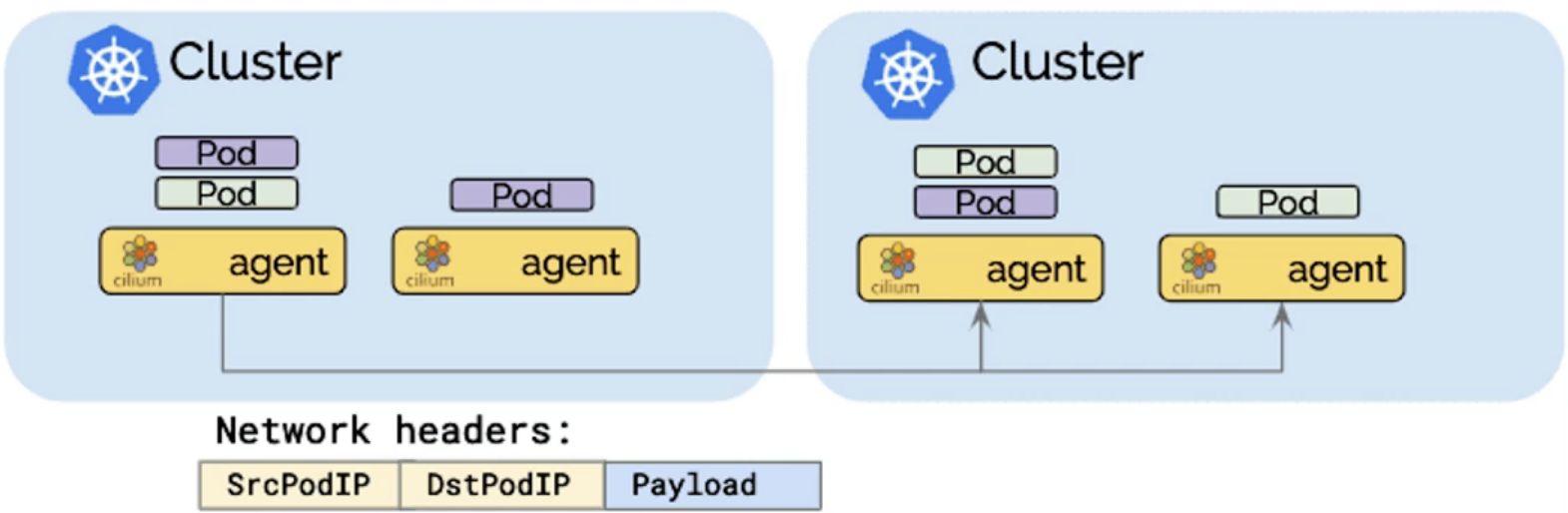

Cilium vs Kube-router

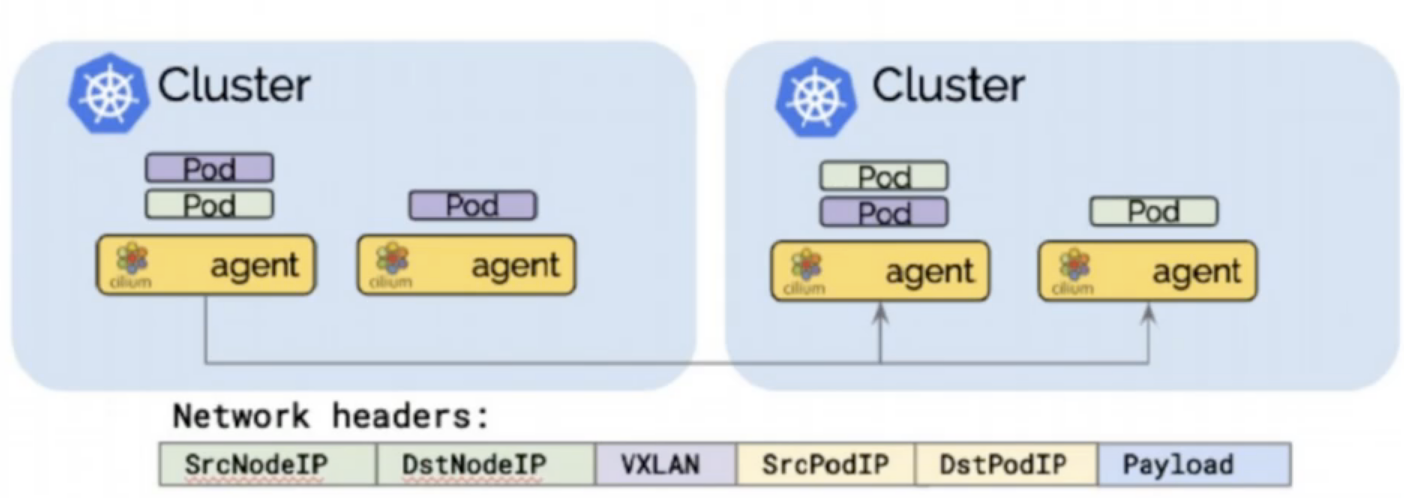

VxLan

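VxLan (Virtual eXtensible Local Area Network) is a virtualized tunneling technology. It is an overlay network technology that builds virtual layer 2 networks on top of a layer 3 network.

Put simply, VxLan uses tunneling over UDP to build an overlay logical network on top of the underlying physical network (the underlay), decoupling the logical network from the physical one and allowing flexible network topologies. It has almost no impact on the existing network architecture: a new network layer can be set up without changing the original network. This is why many CNI plugins choose VxLan as their transport.

VxLan supports not only one-to-one but also one-to-many communication. A VxLan device can learn the IP addresses of its peers in the same way a bridge learns addresses, and a static forwarding table can also be configured directly.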

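Common VxLan terms

VTEP (VXLAN Tunnel Endpoint)

The edge device of a VxLan network, responsible for processing VxLan packets (encapsulation and decapsulation). A VTEP can be a network device (such as a switch) or a machine (such as a host in a virtualization cluster).

VNI (VXLAN Network Identifier)

The VNI identifies each VXLAN segment. It is a 24-bit integer, giving 16,777,216 possible values. Typically each VNI maps to one tenant, so a public cloud built on VxLan can in theory support up to about 16 million tenants.

Tunnel (VxLan tunnel)

A tunnel is a logical concept; nothing in the VxLan model corresponds to it physically. It can be thought of as a virtual channel: the two communicating VxLan endpoints believe they are talking to each other directly and are unaware of the underlying network. Taken as a whole, each VxLan network looks like a dedicated communication channel, a tunnel, set up for the virtual machines that use it.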
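To make the 24-bit VNI concrete, here is a minimal sketch of packing and unpacking the 8-byte VXLAN header defined in RFC 7348. The function and macro names are illustrative and do not come from any particular library.

```c
#include <stdint.h>

#define VXLAN_HDR_LEN  8
#define VXLAN_FLAG_VNI 0x08u     /* "I" flag: the VNI field is valid */
#define VXLAN_VNI_MAX  0xFFFFFFu /* 2^24 - 1 */

/* Write a VXLAN header for the given VNI.
 * Returns 0, or -1 if the VNI does not fit in 24 bits. */
int vxlan_encode(uint8_t hdr[VXLAN_HDR_LEN], uint32_t vni)
{
    if (vni > VXLAN_VNI_MAX)
        return -1;
    hdr[0] = VXLAN_FLAG_VNI;       /* flags */
    hdr[1] = hdr[2] = hdr[3] = 0;  /* reserved */
    hdr[4] = (uint8_t)(vni >> 16); /* VNI, most significant byte first */
    hdr[5] = (uint8_t)(vni >> 8);
    hdr[6] = (uint8_t)vni;
    hdr[7] = 0;                    /* reserved */
    return 0;
}

/* Read the VNI back. Returns -1 if the "I" flag is not set. */
int64_t vxlan_decode_vni(const uint8_t hdr[VXLAN_HDR_LEN])
{
    if (!(hdr[0] & VXLAN_FLAG_VNI))
        return -1;
    return ((int64_t)hdr[4] << 16) | ((int64_t)hdr[5] << 8) | hdr[6];
}
```

The largest VNI that encodes successfully is 16,777,215, which together with 0 gives the 16,777,216 values mentioned above.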

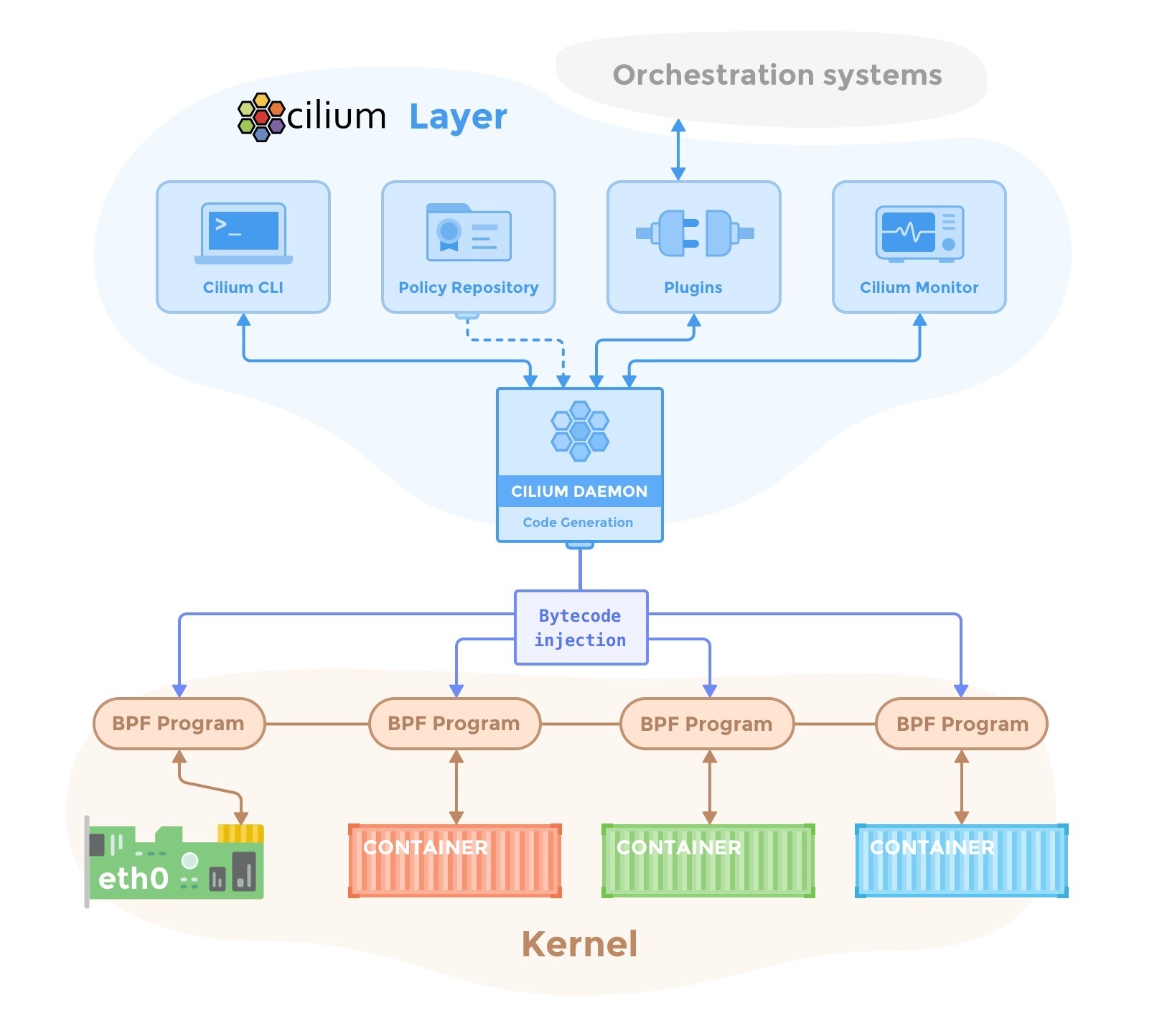

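Cilium components

Cilium agent

- Runs on a per-node basis

- Deployed as a DaemonSet

- Interacts with the CRI and Kubernetes through the CNI plugin

- Allocates addresses through IPAM

- Generates eBPF programs, compiles them to bytecode, and attaches them to the kernel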

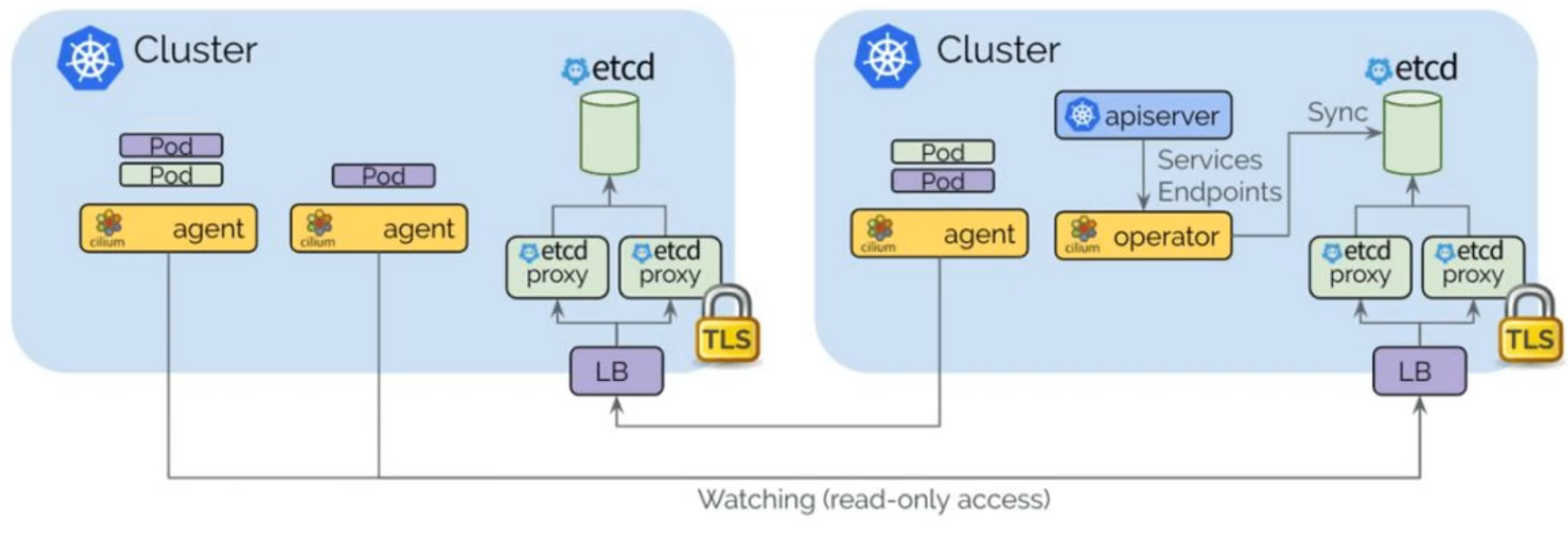

Cilium operator

Cilium control plane

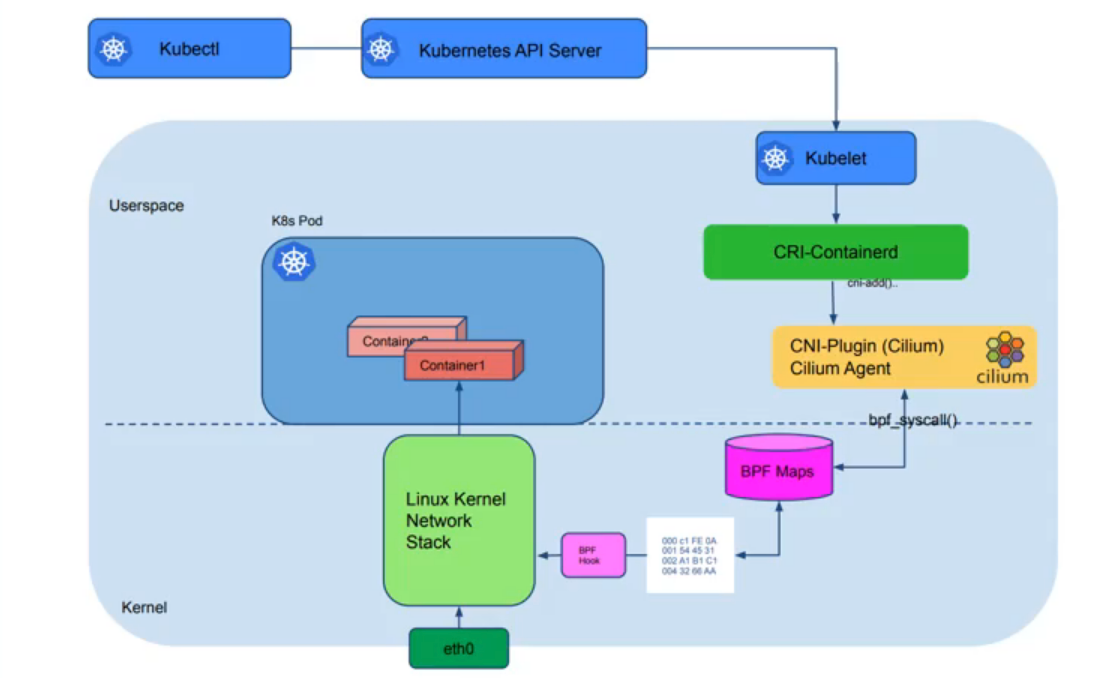

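How a Pod is created

- kubectl sends the request to the API Server

- The API Server writes the Pod information to etcd

- The Scheduler watches the API Server and selects a suitable node

- The kubelet calls the CRI (containerd) to create the container

- The container network is set up by invoking the CNI plugin, that is, the Cilium agent

- The Cilium agent creates the network by calling `bpf_syscall()`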
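The final step above ends in a `bpf()` system call. As a rough illustration of what such a call looks like from user space (this is not Cilium's actual code), the sketch below fills a `union bpf_attr` and asks the kernel to create a hash map. The helper names `fill_hash_map_attr` and `create_hash_map` and the sizes passed to them are hypothetical; creating a map typically requires root or the appropriate capabilities.

```c
#define _GNU_SOURCE
#include <linux/bpf.h>
#include <string.h>
#include <sys/syscall.h>
#include <unistd.h>

/* Describe a BPF hash map. Fields that are not used must be zero. */
void fill_hash_map_attr(union bpf_attr *attr, __u32 key_size,
                        __u32 value_size, __u32 max_entries)
{
    memset(attr, 0, sizeof(*attr));
    attr->map_type = BPF_MAP_TYPE_HASH;
    attr->key_size = key_size;
    attr->value_size = value_size;
    attr->max_entries = max_entries;
}

/* Issue bpf(BPF_MAP_CREATE, ...). Returns a map file descriptor,
 * or -1 with errno set (for example EPERM without privileges). */
int create_hash_map(__u32 key_size, __u32 value_size, __u32 max_entries)
{
    union bpf_attr attr;

    fill_hash_map_attr(&attr, key_size, value_size, max_entries);
    return (int)syscall(__NR_bpf, BPF_MAP_CREATE, &attr, sizeof(attr));
}
```

Loading and attaching programs goes through the same system call with different commands, such as `BPF_PROG_LOAD`.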

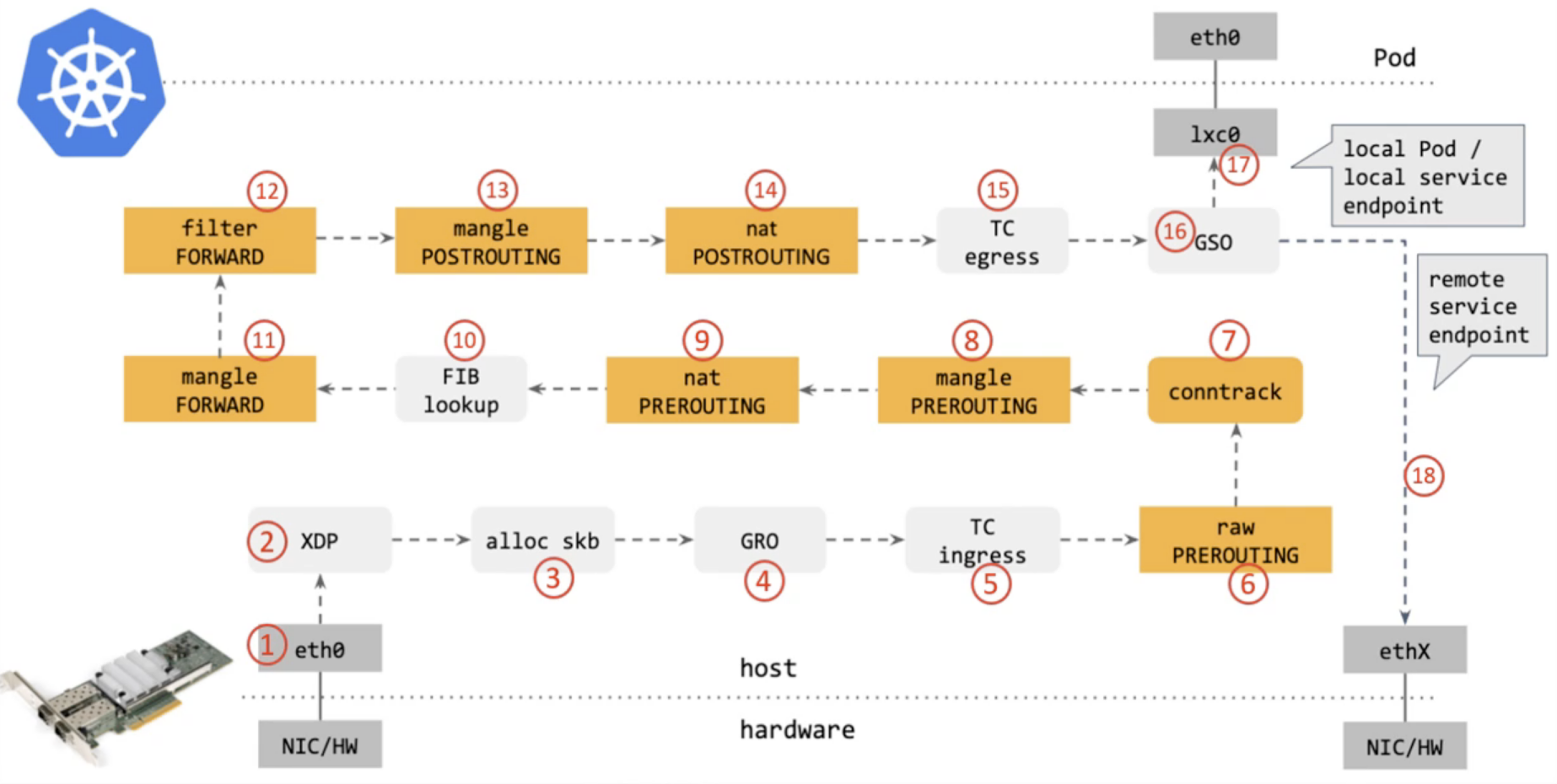

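Cilium data plane - ipvs/iptables

Points a packet passes through on its way from the NIC to a Pod

- It arrives at eth0, where the data sits in the ring buffer; the kernel fetches it from the ring buffer via NAPI polling

- It passes through XDP, which can apply actions such as PASS and DROP, provided the NIC supports XDP

- The kernel allocates an skb for the data; the skb is the kernel's data structure for network packets

- It passes through GRO, which merges packets into larger ones to improve throughput

- It passes through TC ingress, which covers rate limiting, traffic shaping, policy enforcement, and similar operations

- It passes through Netfilter

- It passes through TC egress, which handles queue scheduling for outgoing traffic

- It passes through GSO, which splits large packets into smaller ones

- Local traffic takes step 17 and remote traffic takes step 18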
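As an illustration of the TC ingress step in the list above, the following minimal sketch shows a tc BPF classifier that applies a trivial policy: packets longer than a hypothetical `MAX_LEN` are dropped and everything else continues up the stack. The function name `tc_ingress`, the threshold, the `SEC` helper macro, and the `classifier` section name are assumptions for illustration; this is not Cilium's datapath code.

```c
#include <linux/bpf.h>
#include <linux/pkt_cls.h>

/* Place a symbol in a named ELF section so the loader can find it. */
#ifndef SEC
#define SEC(name) __attribute__((section(name), used))
#endif

#define MAX_LEN 1500 /* hypothetical size limit, in bytes */

/* Runs for each packet at the TC ingress hook, after the skb exists. */
SEC("classifier")
int tc_ingress(struct __sk_buff *skb)
{
    if (skb->len > MAX_LEN)
        return TC_ACT_SHOT; /* drop the packet */
    return TC_ACT_OK;       /* continue normal processing */
}

/* License string read by the kernel when the program is loaded. */
char _license[] SEC("license") = "GPL";
```

Unlike an XDP program, a tc program receives a `struct __sk_buff`, so it can read skb metadata such as the packet length directly.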

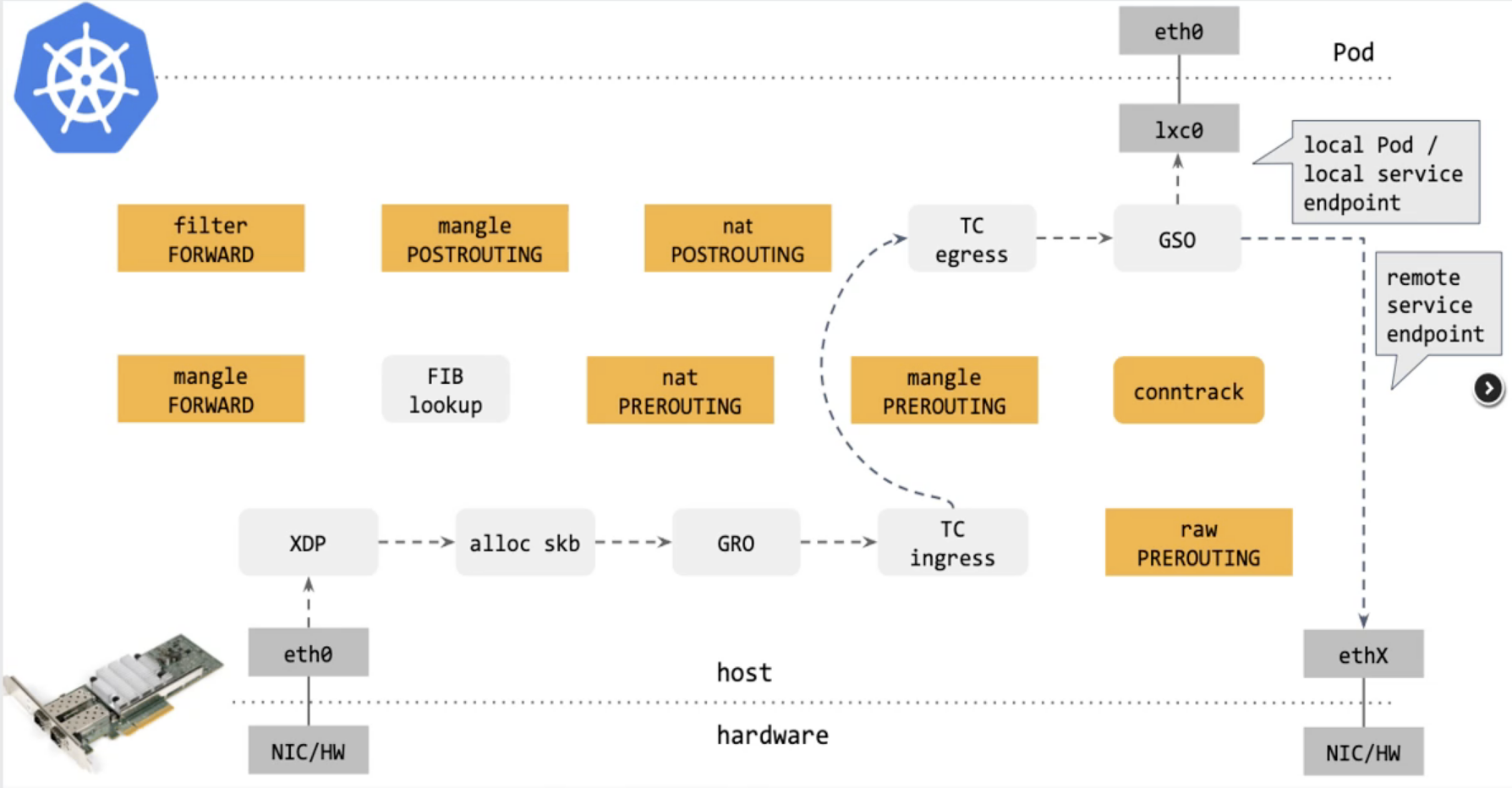

Cilium data plane - eBPF

Cilium data plane - service forwarding

- North-south traffic: XDP or TC

- East-west traffic: BPF socket

Cilium data forwarding and tc hooks

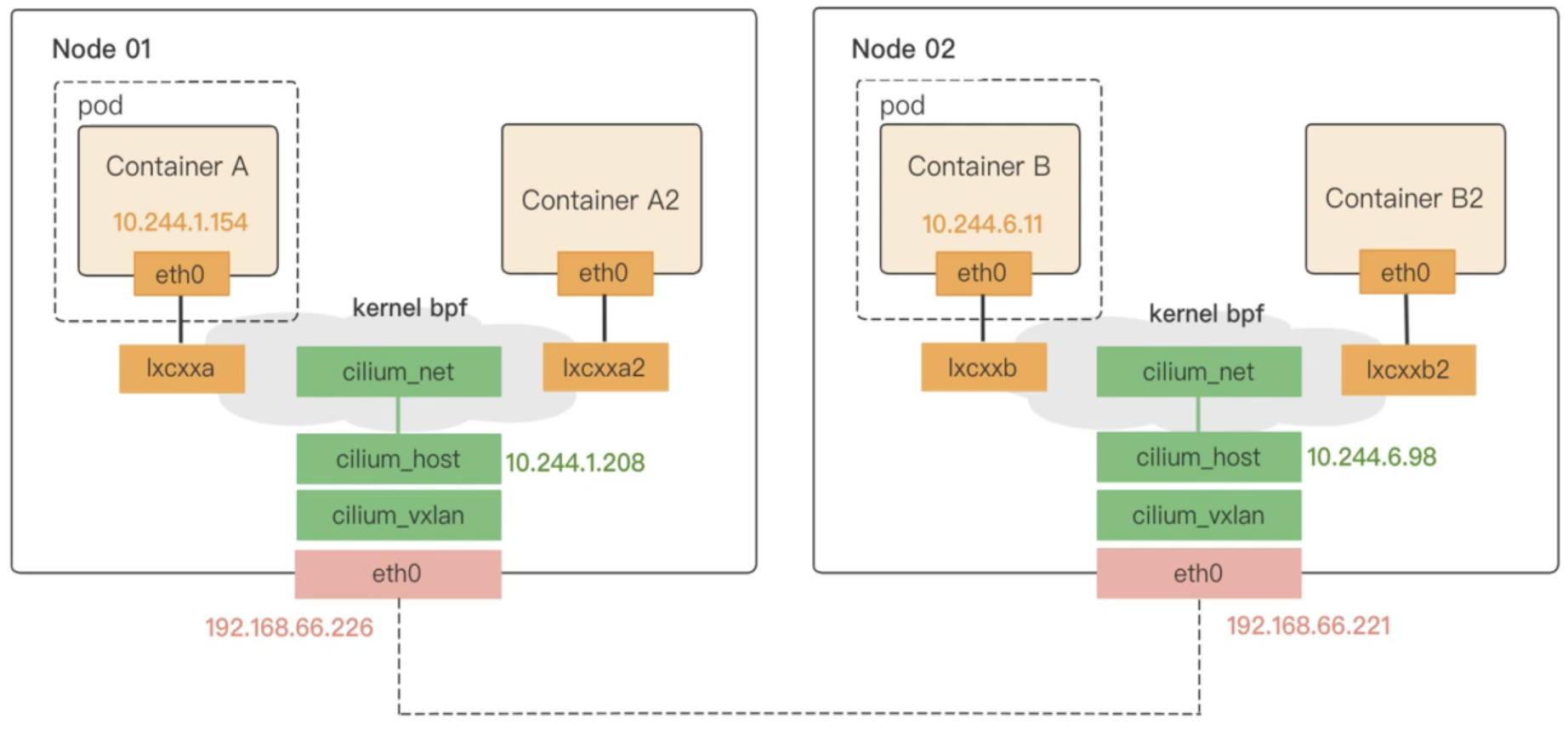

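Cilium creates three virtual interfaces in the host network namespace: cilium_host, cilium_net, and cilium_vxlan. On startup, the Cilium agent creates a veth pair named cilium_host -> cilium_net and assigns the first IP address of the CIDR to cilium_host, which then serves as the gateway for that CIDR. The CNI plugin generates BPF rules, compiles them, and injects them into the kernel to provide connectivity between veth pairs.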
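As a small worked example of the gateway address, the sketch below computes the first address of an IPv4 CIDR, assuming that "first IP address" means the network address plus one. The function name `cidr_first_ip` is hypothetical, and this is only an arithmetic illustration, not how the Cilium agent allocates the address.

```c
#include <stdint.h>

/* Return the first host address of an IPv4 CIDR (network address + 1).
 * addr is in host byte order; prefix_len is assumed to be 0..30. */
uint32_t cidr_first_ip(uint32_t addr, unsigned prefix_len)
{
    uint32_t mask = prefix_len == 0
                        ? 0
                        : ~(uint32_t)0 << (32 - prefix_len);

    return (addr & mask) + 1;
}
```

For 10.0.10.55/24 (0x0A000A37) this yields 10.0.10.1 (0x0A000A01).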

Cilium networking mode - VxLan

Cilium networking mode - BGP router